Global AI leaders agree on safety rules for the first time 📝

ALSO: Should You Use ChatGPT-4 for Medical Notes? How Do AI Models Learn? College Uses AI Mannequins to Train Future Healthcare Practitioners, AI Predicts Real-World Harm from Online Health Misinformation

Hey!

Welcome to this week’s edition of Supermedic, where we explore the latest developments in artificial intelligence and its transformative impact on healthcare.

Let’s get into it!

Victor

TODAY’S MENU

Global AI Leaders Agree to Safety Rules at Seoul Summit

Should You Use ChatGPT-4 for Medical Notes? Study Says No

How Do AI Models Learn? A Beginner's Guide to Machine Learning

College Uses AI Mannequins to Train Future Healthcare Practitioners

AI Tool Predicts the Real-World Impact of Online Health Misinformation

Read time: under 7 minutes

REGULATION

Global AI Leaders Agree to Safety Rules at Seoul Summit

OpenAI's Sam Altman, xAI's Elon Musk, Google's Sundar Pichai and Microsoft's Satya Nadella. Composite image.

This week, an important AI governance conference took place in Seoul, co-hosted by the UK and South Korea. Sixteen leading AI companies, including Amazon, Google, Microsoft, Meta, and OpenAI, signed the “Frontier AI Safety Commitments.”

Key Points:

By signing the Frontier AI Safety Commitments, each company has pledged to develop and deploy its AI models safely and to publish a safety framework detailing how it will assess risks.

These frameworks will define thresholds that indicate when risks are considered “intolerable” and outline the measures companies will take to ensure they do not exceed these limits.

Each company has also committed to being accountable and agreed not to develop or deploy an AI model or system if it cannot keep the associated risks below its specified thresholds.

Governments are also joining the party. The US, UK, Japan, and others announced new safety institutes to study AI risks, aiming for international cooperation despite geopolitical tensions.

Why it matters:

Having so many global AI giants agree to the same safety commitments is a historic first. Such shared commitments could provide the accountability and transparency needed to harness AI's transformative potential in the healthcare industry. However, while these commitments are a significant step forward, they are voluntary pledges and must be followed by concrete actions. The big question remains: Will these companies and governments truly uphold their promises?

THEY SUPPORT US 🩵

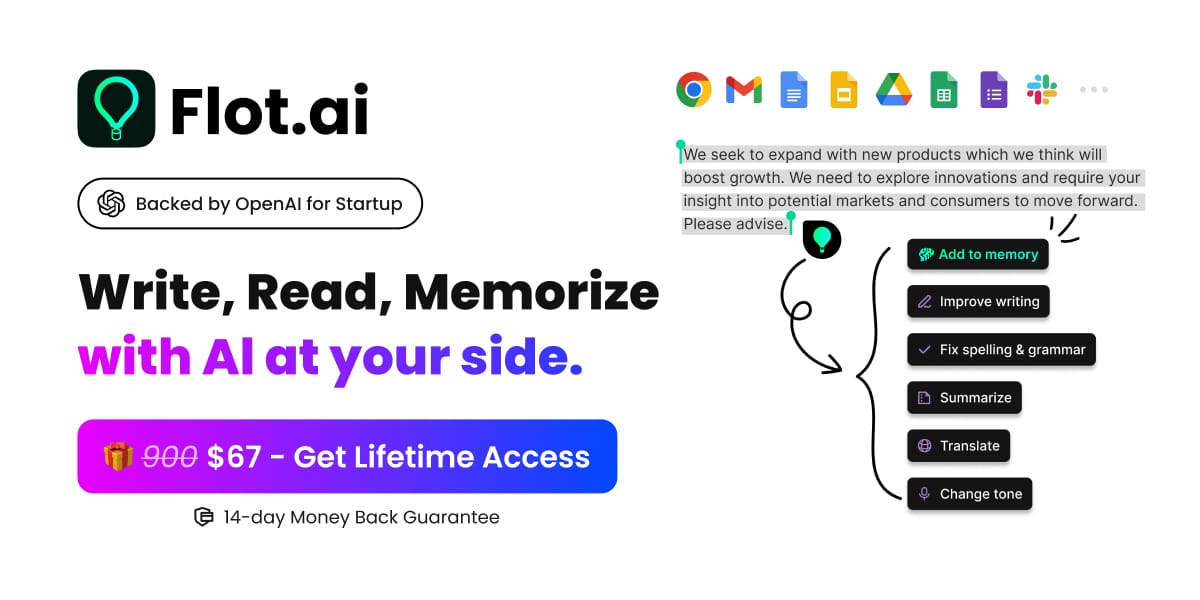

Your Everywhere and All-in-One AI Assistant

Imagine an AI companion that works across any website or app, helping you write better, read faster, and remember information. No more copying and pasting: everything is just one click away. Meet Flot AI! (Available on Windows and macOS)

CHATBOT

Should You Use ChatGPT-4 for Medical Notes? Study Says No

Ever thought of using ChatGPT-4 to generate your medical notes from audio recordings? With paid AI solutions like Deepscribe, Freed, or Nuance proving effective, it's tempting to try ChatGPT-4 as a free alternative. Here’s what you need to know.

A recent study tested ChatGPT-4's ability to create SOAP notes from clinical audio transcripts. The results? Not great. On average, there were 23.6 errors per case, mostly omissions (86%), followed by additions (10.5%) and incorrect facts (3.2%). The accuracy of the notes varied widely, especially with longer and more complex transcripts.
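To put those percentages in perspective, here is a quick back-of-the-envelope calculation. It assumes the reported shares apply directly to the 23.6-error average, which is our simplification and not a figure taken from the study itself.

```python
# Rough arithmetic only: we assume the reported percentage shares can be
# applied directly to the average error count per case.
AVERAGE_ERRORS_PER_CASE = 23.6

error_shares = {
    "omissions": 0.86,
    "additions": 0.105,
    "incorrect facts": 0.032,
}

# Approximate number of errors of each type in a typical note.
errors_per_case = {
    kind: round(AVERAGE_ERRORS_PER_CASE * share, 1)
    for kind, share in error_shares.items()
}

for kind, count in errors_per_case.items():
    print(f"{kind}: about {count} per case")
```

Under that assumption, roughly 20 of the errors in a typical note would be missing information.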

In short, while ChatGPT-4 has potential, it’s not yet reliable enough for clinical documentation. The errors and inconsistencies highlight the importance of sticking with professional solutions specifically designed for medical use.

Keep in mind that ChatGPT and similar large language models are evolving at lightning speed. What isn't possible today may very well become feasible tomorrow.

P.S. A benchmark of the most popular AI medical scribe software is coming soon on Supermedic. Stay tuned!

LEARN ABOUT AI

How Do AI Models Learn? A Beginner's Guide to Machine Learning

As a regular reader of our newsletter, you often encounter the term Machine Learning or ML. But what does it really mean, especially in the context of medical research?

Machine Learning is like teaching a computer to learn from experience, much like you would teach a child to recognize different objects by showing them many examples.

In healthcare, Machine Learning is applied through various methods to analyze data and make predictions. To better understand this, let's explore the three main types of Machine Learning commonly used: supervised learning, unsupervised learning, and reinforcement learning.

Supervised Learning

Supervised learning involves training an AI algorithm with labeled data to classify information or make predictions. Imagine teaching an AI to identify breast cancer by showing it thousands of labeled mammography scans. Each scan is tagged as ‘cancer’ or ‘no cancer.’ The AI learns to recognize patterns and make predictions, which are then compared to human diagnoses to check accuracy. This method automates tasks like pattern recognition, which are crucial in medical diagnostics.
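For the curious, here is a minimal sketch of the idea in Python. It is a toy nearest-centroid classifier, not how real mammography models work: the two numeric features per "scan" and all the values are made up for illustration, and real systems learn from images with far more sophisticated models.

```python
from statistics import mean

def train_centroids(samples, labels):
    """Learn one average feature vector (centroid) per label."""
    grouped = {}
    for features, label in zip(samples, labels):
        grouped.setdefault(label, []).append(features)
    return {
        label: [mean(column) for column in zip(*rows)]
        for label, rows in grouped.items()
    }

def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    return min(centroids, key=lambda label: squared_distance(centroids[label], features))

# Hypothetical labeled training data: two made-up features per scan.
train_features = [[1.0, 0.9], [1.2, 1.1], [0.9, 1.0],
                  [3.0, 3.2], [3.1, 2.9], [2.8, 3.0]]
train_labels = ["no cancer"] * 3 + ["cancer"] * 3

centroids = train_centroids(train_features, train_labels)

# Held-out scans with known (human) diagnoses, used to check accuracy.
test_features = [[1.1, 1.0], [2.9, 3.1]]
test_labels = ["no cancer", "cancer"]

predictions = [predict(centroids, f) for f in test_features]
accuracy = sum(p == t for p, t in zip(predictions, test_labels)) / len(test_labels)
print(predictions, accuracy)
```

The key ingredients are all there: labeled examples to learn from, a prediction step, and a comparison against known answers to measure accuracy.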

Unsupervised Learning

Unsupervised learning, on the other hand, deals with unlabeled data. Here, the AI analyzes the data to find hidden patterns or associations. For example, you could feed an unsupervised algorithm a dataset of de-identified electronic health records (EHRs). The AI clusters similar data points together, potentially revealing new disease causes or patient subgroups. This approach can uncover insights that weren't previously obvious.
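Clustering can be sketched in a few lines as well. The example below uses k-means, one common clustering method chosen here purely for illustration. The "patient records" are invented two-number vectors, and the starting centroids are picked by hand to keep the example deterministic.

```python
def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

def assign(points, centroids):
    """Give each point the index of its nearest centroid."""
    return [
        min(range(len(centroids)), key=lambda i: squared_distance(p, centroids[i]))
        for p in points
    ]

def kmeans(points, centroids, max_iterations=20):
    """Alternate between assigning points and moving centroids until stable."""
    for _ in range(max_iterations):
        assignments = assign(points, centroids)
        updated = []
        for i, old in enumerate(centroids):
            members = [p for p, a in zip(points, assignments) if a == i]
            # Keep the old centroid if no point was assigned to it.
            updated.append(
                [sum(col) / len(members) for col in zip(*members)] if members else old
            )
        if updated == centroids:
            break
        centroids = updated
    return assign(points, centroids), centroids

# Hypothetical unlabeled records: note that no diagnosis is attached.
records = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1],
           [5.0, 5.2], [5.1, 4.9], [4.8, 5.0]]

# Hand-picked starting centroids (an assumption made for reproducibility).
clusters, final_centroids = kmeans(records, [records[0], records[-1]])
print(clusters)
```

No labels were provided, yet the algorithm separates the records into two groups on its own. Deciding what those groups mean clinically is still a job for humans.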

Reinforcement Learning

Reinforcement learning trains an AI by rewarding it for making correct decisions and penalizing it for wrong ones. This technique is useful for sequential decision-making processes, such as optimizing treatment plans. Take sepsis treatment: the AI evaluates patient data like vital signs and lab results, decides on interventions (e.g., fluid or vasopressor administration), and learns from the outcomes. Over time, it figures out the best actions to maximize patient recovery rates, guided by rewards for successful treatments and penalties for failures.
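The reward-and-penalty loop can also be sketched in code. Below is a toy Q-learning example (one classic reinforcement learning algorithm, used here as an illustration). The patient states, the two placeholder interventions, and every transition and reward are invented for the demo and carry no clinical meaning. To keep it deterministic, the agent tries every state and action in turn instead of exploring at random.

```python
# Toy environment: (state, action) -> (next_state, reward).
# "recovered" and "deteriorated" are terminal states. All values are made up.
TRANSITIONS = {
    ("critical", "intervention_a"): ("stable", 0.0),
    ("critical", "intervention_b"): ("deteriorated", -1.0),  # penalty
    ("stable", "intervention_a"): ("critical", 0.0),
    ("stable", "intervention_b"): ("recovered", 1.0),        # reward
}

LEARNING_RATE = 0.5   # how strongly each outcome updates the estimate
DISCOUNT = 0.9        # how much future rewards count compared to immediate ones

# Q[state][action] estimates the long-term value of taking an action in a state.
Q = {
    "critical": {"intervention_a": 0.0, "intervention_b": 0.0},
    "stable": {"intervention_a": 0.0, "intervention_b": 0.0},
}

for _ in range(50):  # repeated sweeps over every state-action pair
    for (state, action), (next_state, reward) in TRANSITIONS.items():
        # Terminal states have no further value.
        future = max(Q[next_state].values()) if next_state in Q else 0.0
        target = reward + DISCOUNT * future
        Q[state][action] += LEARNING_RATE * (target - Q[state][action])

# The learned policy picks the highest-valued action in each state.
policy = {state: max(actions, key=actions.get) for state, actions in Q.items()}
print(policy)
```

After enough rounds of feedback, the agent learns to choose the action that leads toward the rewarded outcome in each state, even when the reward only arrives one step later.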

Source: AMA


EDUCATION

College Uses AI Mannequins to Train Future Healthcare Practitioners

Darlington College (UK) is set to transform its health and social care training with the introduction of AI-powered mannequins. These will be used to create realistic scenarios in a new facility that includes a hospital ward, GP surgery, and nursing home.

Key Takeaways:

AI mannequins allow students to practice various procedures, such as measuring blood pressure, taking blood, and performing injections, in a safe and controlled environment.

The mannequins come with speech recognition, AI voice responses, and physiology simulations, enabling students to build critical thinking and decision-making skills.

The new training approach aims to address the healthcare sector's significant staff shortages, with the NHS facing an 8.4% vacancy rate and social care experiencing a 9.9% vacancy rate.

The integration of AI technology in healthcare education promises to provide students with valuable hands-on experience and better prepare them for real-world challenges. As Darlington College leads the way in this innovative training method, it sets an example for other institutions to follow in tackling the pressing need for skilled healthcare professionals.

BIG DATA

AI Tool Predicts the Real-World Impact of Online Health Misinformation

Scientists have developed a new AI tool that can predict how misinformation on Reddit influences real-world behavior, like rejecting COVID vaccinations. By analyzing the wording and phrasing in Reddit posts, this AI model can foresee spikes in hospitalizations and deaths.

How It Works:

The tool tracks thousands of posts from anti-vaccine forums, even those that are banned.

It uses "fuzzy-trace theory" to understand the gist of messages, focusing on the underlying meaning rather than literal words.

Posts that imply a direct link between events, such as feeling ill after a vaccine shot, are particularly persuasive and influential.

This new approach could help scientists anticipate the effects of misinformation in future pandemics and other significant events, potentially guiding better content moderation and public health strategies.

Other AI Healthcare News:

Physiatry: Researchers introduce bio-inspired 3D programmable materials to help heal broken bones.

Oncology: AI shows promise in automating the staging process for oropharyngeal cancer.

Cardiology: A new study reveals a significant link between hot nights and increased stroke risk, particularly in the elderly and women.

Women's Health: Ovum AI launches Australia’s first longitudinal dataset to revolutionize women’s healthcare.

Business: French healthtech company Doctolib announced its acquisition of Berlin company Aaron.ai, a provider of AI-based telephone assistance solutions for medical practices.

Thanks for reading!

